On May 13, 2024, OpenAI announced a new model called GPT-4o. GPT-4o responds about twice as fast as previous models and can understand text, images, audio, and video. In this article, we explain the basics of OpenAI's latest GPT-4o model and show how to integrate the GPT-4o API into your own services.


What is GPT-4o?

GPT-4o is the latest AI model announced by OpenAI on May 13th. The "o" in "4o" stands for "omni," meaning "all-encompassing." Unlike the previous text and image-based interactions with ChatGPT, GPT-4o allows you to interact with it using a combination of text, audio, images, and video.

For more details, visit the official website: https://openai.com/index/hello-gpt-4o/

Main Features of GPT-4o

So, what features does OpenAI's latest model, GPT-4o, have compared to previous models?

2X Faster Response Time

According to OpenAI, a voice conversation with earlier models such as GPT-3.5 and GPT-4 required three steps:

- Convert speech to text

- Generate response text

- Convert text to speech

In previous models, the average delay in this process was 2.8 seconds for GPT-3.5 and 5.4 seconds for GPT-4. GPT-4o, by contrast, can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is close to human response time in a conversation. In other words, the latest GPT-4o model enables nearly real-time interaction with AI.

Understands Audio Tone

Previous GPT models could not recognize the tone of the speaker's voice or background noise, so some information was lost in conversations. GPT-4o can now pick up on the speaker's tone of voice and emotions, which makes its responses feel more human-like.

Token Reduction for Many Languages

Additionally, GPT-4o uses a new tokenizer that reduces the number of tokens needed for 20 languages, including Japanese. Taking Japanese as an example, this means that using ChatGPT in Japanese now consumes fewer tokens.

- For the Japanese sentence meaning "Hello, my name is GPT-4o. I am a new type of language model. Nice to meet you," the number of tokens decreased from 37 to 26, about 1.4 times fewer.
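To make the "1.4 times" figure concrete, the short Python sketch below recomputes it from the two token counts quoted above. It uses only those two numbers and does not run a real tokenizer.

```python
# Token counts quoted above for the example sentence.
tokens_before = 37  # previous tokenizer
tokens_after = 26   # GPT-4o tokenizer

# How many times fewer tokens the new tokenizer needs.
reduction_factor = tokens_before / tokens_after

# Share of tokens saved relative to the previous count.
saved_fraction = 1 - tokens_after / tokens_before

print(f"{reduction_factor:.1f}x fewer tokens")
print(f"{saved_fraction:.0%} of tokens saved")
```

Since API usage is billed per token, a saving of roughly 30% in tokens for the same text translates into a similar saving in cost for that text.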

Other Important Information from the GPT-4o Announcement

In addition to the features of the GPT-4o model itself, the following information was also highlighted at the GPT-4o announcement:

Almost All Services are Free

Previously paid services like GPTs, GPT Store, and GPT-4 will be made available for free following the announcement of GPT-4o.

Desktop App Provided

While ChatGPT has only been available online until now, a new ChatGPT desktop app for macOS was introduced at the GPT-4o announcement. A Windows app is also expected to be released in the second half of 2024.

Understanding the Details of GPT-4o

If you want more detail about OpenAI's new AI model, GPT-4o, you can watch OpenAI's video recording of the announcement event.

How to Access GPT-4o API?

When developing web applications, it's very convenient to use APIs to integrate AI functionality into your own services. To bring the capabilities of the GPT-4o model into your own services, you'll need to use the GPT-4o API. So, is the GPT-4o API available? How much does it cost? Let's take a closer look at these questions.

Is the GPT-4o API Available to Use?

According to the latest information from OpenAI, the GPT-4o model API is already available as a text and vision model in the Chat Completions API, Assistants API, and Batch API.

Updates of GPT-4o API

Compared to the previous ChatGPT model APIs, the GPT-4o API is considered better in the following areas:

- Higher intelligence: Provides GPT-4 Turbo-level performance in text, reasoning, and coding abilities, and sets new high standards in multilingual, audio, and visual capabilities.

- 2x faster response speed: Token generation speed is doubled compared to GPT-4 Turbo.

- 50% cheaper price: 50% cheaper than GPT-4 Turbo for both input and output tokens.

- 5x higher rate limit: The rate limit is 5 times higher than GPT-4 Turbo, up to 10 million tokens per minute.

- Improved visual capabilities: Visual capabilities have improved for most tasks.

- Improved non-English language capabilities: Non-English languages are processed better, and a new tokenizer tokenizes non-English text more efficiently.

GPT-4o API Pricing

So, how much does it cost to use the latest GPT-4o API? According to the official OpenAI API website, GPT-4o is faster and more cost-effective than GPT-4 Turbo, while offering more powerful vision capabilities. The model has a 128K context window and a knowledge cutoff of October 2023. According to the OpenAI API pricing page, the pricing for GPT-4o is as follows:

- Text input: $5 / 1M tokens

- Text output: $15 / 1M tokens

The cost of vision processing (image input) is calculated from the width and height of the image. For example, processing an image 150px wide and 150px high costs $0.001275. You can adjust the image resolution freely, and the price depends on the image's dimensions.

So, whether it's text input, text output, or image input, the GPT-4o API costs half as much as GPT-4 Turbo.
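As a rough illustration of how these prices add up, here is a minimal Python sketch that estimates the cost of a request from its token counts. The function name `estimate_cost` is made up for this example, and the prices are the ones quoted in this article, so check the pricing page for current values. The 255-token figure for the image is inferred from the article's own numbers ($0.001275 at $5 per 1M tokens), not quoted from OpenAI.

```python
# Prices quoted in this article, in USD per 1M tokens (may change over time).
INPUT_PRICE_PER_M = 5.00
OUTPUT_PRICE_PER_M = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one request (hypothetical helper)."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# A request with 1,000 input tokens and 500 output tokens.
text_cost = estimate_cost(1_000, 500)

# Inferred: the 150px x 150px image example ($0.001275) corresponds to
# this many input tokens at the input price above.
image_tokens = round(0.001275 / INPUT_PRICE_PER_M * 1_000_000)

print(f"text request: ${text_cost:.4f}")
print(f"image example: {image_tokens} input tokens")
```

Because image inputs are billed as input tokens, the same helper can be reused for vision requests once you know the token count for an image.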

Important Notes In Using the GPT-4o API

When using the GPT-4o API or trying to switch from other models to the GPT-4o API, you need to pay attention to the following points:

- The GPT-4o API can understand video (without audio) through its vision capabilities. Specifically, you need to convert the video into frames (2-4 frames per second, either uniformly sampled or selected with a keyframe selection algorithm) and then input those frames into the model.

- As of May 14, 2024, the GPT-4o API does not yet support audio modality. However, OpenAI expects to provide audio modality to trusted testers within the next few weeks.

- As of May 14, 2024, the GPT-4o API does not yet support image generation, so if you need image generation, it is recommended to use the DALL-E 3 API.

- OpenAI recommends that all users currently using GPT-4 or GPT-4 Turbo consider switching to GPT-4o. However, GPT-4o is not necessarily more capable than GPT-4 or GPT-4 Turbo in every case, so OpenAI suggests trying GPT-4o and comparing its outputs before you switch.
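The frame-sampling step described in the first note above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the helper names `sample_frame_indices` and `build_video_message` are hypothetical, uniform sampling is used (not keyframe selection), and the frames are assumed to be already extracted as base64-encoded JPEG strings by a video library of your choice. The message layout follows the image-input format of the Chat Completions API.

```python
def sample_frame_indices(total_frames: int, video_fps: float,
                         target_fps: float = 2.0) -> list[int]:
    """Pick frame indices uniformly so that about target_fps frames
    are kept per second of video (hypothetical helper)."""
    if total_frames <= 0 or video_fps <= 0 or target_fps <= 0:
        raise ValueError("total_frames, video_fps and target_fps must be positive")
    # Distance between kept frames, never smaller than one frame.
    step = max(video_fps / target_fps, 1.0)
    indices = []
    i = 0
    while int(i * step) < total_frames:
        indices.append(int(i * step))
        i += 1
    return indices


def build_video_message(prompt: str, frames_b64: list[str]) -> dict:
    """Build one user message with a text prompt followed by the frames.
    frames_b64 is assumed to hold base64-encoded JPEG data."""
    content = [{"type": "text", "text": prompt}]
    for frame in frames_b64:
        content.append({
            "type": "image_url",
            "image_url": {"url": f"data:image/jpeg;base64,{frame}"},
        })
    return {"role": "user", "content": content}


# A 10-second clip at 30 fps, sampled at 2 frames per second.
indices = sample_frame_indices(total_frames=300, video_fps=30.0, target_fps=2.0)
print(len(indices), indices[:4])
```

Each frame is billed as an image input, so sampling at the low end of the 2-4 frames per second range keeps the cost of long clips down.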

Easily Test & Manage GPT-4o API with Apidog

When using the GPT-4o API, tasks like API testing and management become essential.

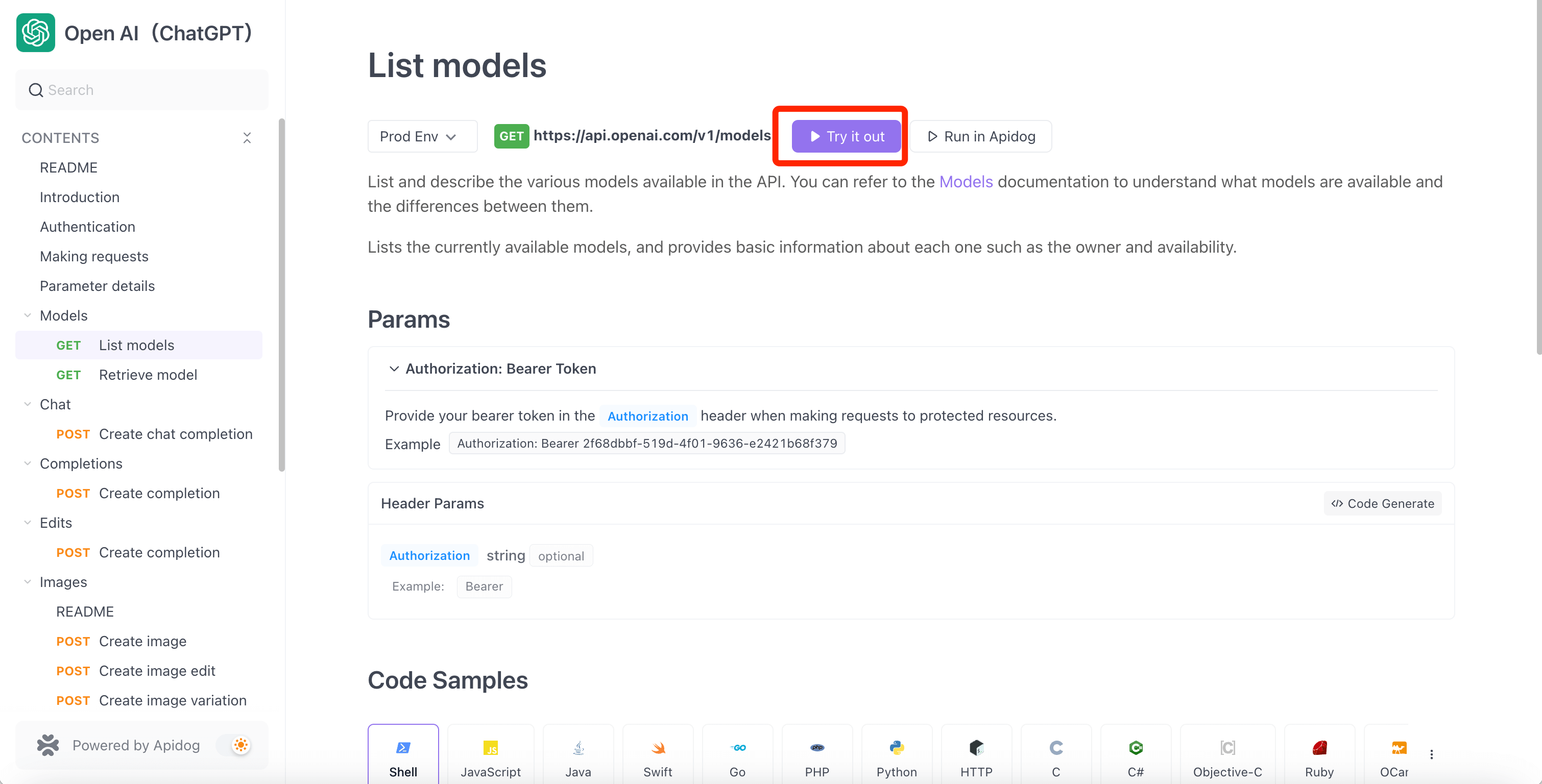

With Apidog, a convenient API management tool, you can handle any API more easily and efficiently. Since the GPT-4o API is already available, you can open the API Hub on Apidog and find the OpenAI API project there. Then, you can clone that project into your own workspace, use and test the GPT-4o API, and manage it conveniently with Apidog.

Additionally, Apidog supports Server-Sent Events (SSE), making it easy to stream the GPT-4o API!
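To show what a streamed response looks like on the wire, here is a minimal Python sketch that reassembles text from SSE lines. Streaming is requested by adding "stream": true to the Chat Completions request body. The function name `collect_stream_text` is hypothetical, and the sample lines are hand-written, simplified chunks rather than captured API output.

```python
import json


def collect_stream_text(lines):
    """Join the text fragments carried by a sequence of SSE lines."""
    parts = []
    for raw in lines:
        line = raw.strip()
        # Only "data:" lines carry payloads; blank lines separate events.
        if not line.startswith("data:"):
            continue
        data = line[len("data:"):].strip()
        # The stream ends with a literal [DONE] marker.
        if data == "[DONE]":
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


# Hand-written sample stream (simplified; real chunks carry more fields).
sample_lines = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    '',
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    '',
    'data: {"choices": [{"delta": {"content": ", world"}}]}',
    '',
    'data: [DONE]',
]

print(collect_stream_text(sample_lines))  # prints: Hello, world
```

A tool with SSE support does this reassembly for you and shows the reply as it arrives.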

Prerequisite for Using the GPT-4o API: OpenAI API Key

To start using the GPT-4o API, you first need to obtain an OpenAI API key. Follow the tutorial below to get yours.

1. Sign up for an OpenAI account

First, create an OpenAI account. Go to the official OpenAI website and click the "Get Started" button in the top right corner to sign up.

2. Obtain the OpenAI API key

After creating your OpenAI account, you need to obtain an API key for authentication, which is required for using the GPT-4o API. Follow these steps to get your OpenAI API key:

Step 1: Access the API Keys page on OpenAI and log in with your account (or create a new account if you don't have one).

Step 2: Click the "Create new secret key" button to generate a new API key.

After generating the API key, it will be immediately displayed on the screen. However, you won't be able to view the API key again, so it's recommended to record and securely store it.
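One common way to store the key securely is to keep it out of your source code and read it from an environment variable. The sketch below assumes the variable is named `OPENAI_API_KEY`, which is a widely used convention. The helper names `load_api_key` and `mask_key` are hypothetical.

```python
import os


def load_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Read the API key from an environment mapping and fail early if missing."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set")
    return key


def mask_key(key, visible=4):
    """Hide all but the last few characters so the key can be logged safely."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


# Demonstration with a fake key in a plain dict instead of the real environment.
fake_env = {"OPENAI_API_KEY": " sk-test-1234 "}
print(mask_key(load_api_key(fake_env)))
```

In real code you would call `load_api_key()` with no arguments after setting the variable in your shell or deployment environment.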

Test & Manage GPT-4o API with Apidog

Apidog is an incredibly convenient tool for using the GPT-4o API. Apidog has an OpenAI API project that covers all the APIs provided by OpenAI, so you can browse every OpenAI endpoint in that project.

Currently, the GPT-4o API is only available in the Chat Completions API, Assistants API, and Batch API, so select each one from the left menu of the OpenAI API project to start using the GPT-4o API.

Step-by-step: Using the GPT-4o API with Apidog

When accessing the OpenAI API project on Apidog, you can easily test the OpenAI APIs by following these steps. Let's go through how to use the GPT-4o with the Chat Completions API.

Step 1: Access the OpenAI API project on Apidog, select the Chat Completions API endpoint from the left menu, and on the new request screen, enter the HTTP method and endpoint URL according to the OpenAI API specification. Then, in the "Body" tab, write the message you want to send to the model in JSON format.

Note: To use GPT-4o, specify the model as "gpt-4o" by including "model":"gpt-4o".
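For reference, the JSON body from Step 1 can be sketched in Python as shown below. The Chat Completions endpoint is `POST https://api.openai.com/v1/chat/completions`. The helper name `build_chat_body` and the example message are made up for illustration.

```python
import json

# Chat Completions endpoint, called with the POST method.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_body(user_message, model="gpt-4o", system_prompt=None):
    """Build the JSON body for a Chat Completions request (hypothetical helper)."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages}


body = build_chat_body("Hello, GPT-4o!")

# This is the text you would paste into the "Body" tab.
print(json.dumps(body, indent=2))
```

Switching an existing request from another model to GPT-4o only requires changing the value of the "model" field.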

Step 2: Switch to the "Header" tab, add the Authorization parameter to authenticate with the OpenAI API, enter the OpenAI API key you obtained, and click the "Send" button.

Note: In Apidog, you can store your OpenAI API key as an environment variable. You can then reference that variable in later requests instead of re-entering the API key each time.
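Outside Apidog, the same authenticated request can be sketched with Python's standard library. The OpenAI API expects the key in an `Authorization: Bearer <key>` header. This is a sketch under assumptions: the helper name `build_request` is hypothetical, the key shown is a placeholder, and the request is only constructed here, not sent.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(api_key, body):
    """Create a POST request carrying the JSON body and the auth header."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


body = {
    "model": "gpt-4o",
    "messages": [{"role": "user", "content": "Hello, GPT-4o!"}],
}

# Placeholder key for illustration; read the real one from an environment variable.
request = build_request("sk-your-key-here", body)
print(request.get_method(), request.full_url)

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Keeping the key in a variable rather than in the request definition mirrors the environment-variable approach described in the note above.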

Summary

In this article, we provided a detailed explanation of OpenAI's latest model, GPT-4o. GPT-4o responds about twice as fast as previous models and can understand text, images, audio, and video. Additionally, the number of tokens used for Japanese has been reduced, improving cost performance.

The GPT-4o API is available in the Chat Completions API, Assistants API, and Batch API, with features such as higher intelligence, 2x faster response speed, 50% cheaper pricing, 5x higher rate limit, improved visual capabilities, and improved non-English language capabilities compared to the previous ChatGPT model APIs.

To use the GPT-4o API, you first need to create an OpenAI account and obtain an API key. Then, with Apidog, you can easily test and manage the GPT-4o API. Apidog has an OpenAI API project that covers the GPT-4o API specifications, and you can store your API key as an environment variable to avoid re-entering it.

OpenAI plans to add audio support to the GPT-4o API in the future. By taking advantage of the excellent features of GPT-4o and incorporating them into your services, you can provide an even better AI experience.